4. Provisioning Infra Using Terraform

There are multiple ways to deploy the solution to the cloud. This section provides the steps for using Terraform infrastructure-as-code.

Overview

Before provisioning the cloud resources, we need to be clear about which resources Terraform must create to deploy DIGIT. The following picture shows the key components: EKS, worker nodes, Postgres DB, EBS volumes, and load balancer.

Given this deployment architecture, the following resources are provisioned with Terraform in a standard way, so that every run and every environment ends up with the same infra:

EKS Control Plane (Kubernetes Master)

Worker node group (VMs with the estimated number of vCPUs and memory)

EBS Volumes (Persistent Volumes)

RDS (Postgres)

VPCs (Private network)

Users for access, deployment, and read-only use

Pre-requisites

(Optional) Create your own Keybase key before you run Terraform.

Use https://keybase.io/ to create your own PGP key. This creates both public and private keys on your machine. Upload the public key to the Keybase account you have just created, give it a name, and make sure you reference that name in your Terraform configuration. This allows all sensitive information to be encrypted.

For example, in the eGov case the Keybase user "egovterraform" needs to be created, and its public key uploaded here: https://keybase.io/egovterraform/pgp_keys.asc

You can use this portal to decrypt your secret key. To decrypt a PGP message, upload the PGP message, the PGP private key, and the passphrase.

Fork the DIGIT-DevOps repository into your organization account using the GitHub web portal. Make sure to add the right users to the repository. Clone the forked DIGIT-DevOps repository (not the egovernments one). Navigate to the sample-aws directory, which contains the sample AWS infra provisioning script.

The sample-aws Terraform script is provided as a helper/guide. An experienced DevOps engineer can modify or customize it to fit the organization's infra needs.

Create a Terraform backend to specify the location of the Terraform state file on S3 and the DynamoDB table used for state file locking. This step is optional. The S3 buckets have to be created outside of the Terraform script.

Remote state simply means storing the state file remotely rather than on your local filesystem. In an enterprise project, or whenever Terraform is used by a team, setting up remote state is recommended.

In the sample-aws/remote-state/main.tf file, specify the S3 bucket that stores the state of each execution.
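Since the bucket has to exist before Terraform can use it, one way to create it is with the AWS CLI. The following is a minimal sketch, not part of the DIGIT-DevOps scripts: the bucket name and region are hypothetical placeholders, the `run` and `create_state_backend` helpers are invented for illustration, and enabling versioning is an optional extra that the guide does not require. By default it only prints the commands.

```shell
# Sketch: create the S3 state bucket outside Terraform.
# BUCKET and REGION are hypothetical placeholders, not values from this guide.
set -eu

BUCKET="${BUCKET:-my-digit-terraform-state}"
REGION="${REGION:-ap-south-1}"
DRY_RUN="${DRY_RUN:-1}"   # 1 = only print the commands, 0 = execute them

# Print the command in dry-run mode, otherwise execute it.
run() {
  if [ "$DRY_RUN" = "1" ]; then echo "+ $*"; else "$@"; fi
}

create_state_backend() {
  # Note: for us-east-1 the --create-bucket-configuration option must be omitted.
  run aws s3api create-bucket --bucket "$BUCKET" --region "$REGION" \
    --create-bucket-configuration "LocationConstraint=$REGION"
  # Optional: keep older versions of the state file (an assumption, not a guide requirement).
  run aws s3api put-bucket-versioning --bucket "$BUCKET" \
    --versioning-configuration Status=Enabled
}

create_state_backend
```

Run it once with the default `DRY_RUN=1` to review the commands, then with `DRY_RUN=0` and your own `BUCKET` and `REGION` to execute them.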

2. Network Infrastructure Setup

Once executed, the Terraform script performs all of the infrastructure setup below.

Amazon EKS requires subnets in at least two different availability zones.

Create AWS VPC (Virtual Private Cloud).

Create two public and two private Subnets in different availability zones.

Create an Internet Gateway to provide internet access for services within VPC.

Create a NAT Gateway in the public subnets. It lets services in the private subnets connect to the internet.

Create Routing Tables and associate subnets with them. Add required routing rules.

Create Security Groups and add the required rules.

EKS cluster setup

The main.tf file inside the sample-aws folder contains the detailed definitions of the resources to be provisioned. Please have a look at it.

3. Custom Variables/Configurations

Navigate to the directory: DIGIT-DevOps/Infra-as-code/terraform/sample-aws. Configurations are defined in variables.tf and provide the environment-specific cloud requirements.

The following values need to be replaced in these files. Any value left blank will be prompted for during execution.

1. cluster_name - Provide your EKS cluster name here.

2. availability_zones - This is a comma-separated list. For a multi-AZ setup, provide multiple zones here. If you provide a single zone, all infra will be provisioned within that zone. For example:

3. bucket_name - The name of the S3 bucket, if you've created a dedicated one to store the Terraform state.

4. dbname - Any DB name of your choice. Note that this CANNOT contain hyphens or other special characters. Underscores are permitted. Example: digit_test

All other variables have defaults, which an admin who is familiar with them can modify.

5. In the providers.tf file in the same directory, modify the "profile" variable to point to the AWS profile that was created in Step 3.
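The dbname rule from item 4 can be checked before editing variables.tf. The sketch below is only an illustration: `is_valid_dbname` is a hypothetical helper, and requiring the name to start with a letter is an assumption that is stricter than the rule stated above.

```shell
# Sketch: check a candidate dbname before putting it in variables.tf.
# is_valid_dbname is a hypothetical helper, not part of DIGIT-DevOps.
is_valid_dbname() {
  case "$1" in
    # Reject empty names, names not starting with a letter (assumption),
    # and names containing anything other than letters, digits, or underscores.
    ''|[!A-Za-z]*|*[!A-Za-z0-9_]*) return 1 ;;
    *) return 0 ;;
  esac
}

for name in digit_test digit-test; do
  if is_valid_dbname "$name"; then
    echo "$name: ok"
  else
    echo "$name: rejected"
  fi
done
# prints:
#   digit_test: ok
#   digit-test: rejected
```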

Make sure your AWS session tokens are up to date in the ~/.aws/credentials file (in your home directory).

Before running Terraform, make sure to clean up the .terraform.lock.hcl, .terraform, and terraform.tfstate files if you are starting from scratch.
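The cleanup described above can be sketched as a small helper. `clean_terraform_dir` is a hypothetical name used only for illustration. Deleting terraform.tfstate discards Terraform's record of existing resources, so this is only appropriate when starting from scratch, as stated above.

```shell
# Sketch: remove local Terraform leftovers before a from-scratch run.
# clean_terraform_dir is a hypothetical helper; it takes the directory to clean
# and defaults to the current directory.
clean_terraform_dir() {
  rm -rf "${1:-.}/.terraform"
  rm -f "${1:-.}/.terraform.lock.hcl" "${1:-.}/terraform.tfstate"
}
```

For example, `clean_terraform_dir DIGIT-DevOps/Infra-as-code/terraform/sample-aws` would clean the directory named earlier in this section. Other files in the directory, such as main.tf, are left untouched.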

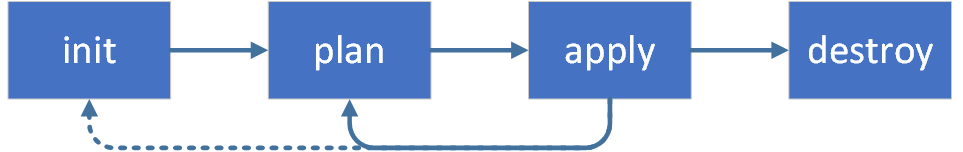

Terraform Execution: Infrastructure Resources Provisioning

Once you have finished declaring the resources, you can deploy them all.

Let's run the Terraform scripts to provision the infra required to deploy DIGIT on AWS.

First, cd into the following directory and run the following command to create the remote state.

Once the remote state is created, you are ready to provision DIGIT infra. Please run the following commands:
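The two steps above, remote state first and then the DIGIT infra, can be sketched with the standard Terraform init, plan, and apply cycle. This is an assumption about the intended commands rather than the guide's exact listing. The directory path is the one quoted in this section and may need adjusting to your clone, and `run` and `provision` are hypothetical helpers. By default the script only prints the commands.

```shell
# Sketch: create the remote state first, then provision the DIGIT infra.
# SAMPLE_AWS uses the path quoted in this guide; adjust it to your clone.
set -eu

SAMPLE_AWS="${SAMPLE_AWS:-DIGIT-DevOps/Infra-as-code/terraform/sample-aws}"
DRY_RUN="${DRY_RUN:-1}"   # 1 = only print the commands, 0 = execute them

# Print the command in dry-run mode, otherwise execute it.
run() {
  if [ "$DRY_RUN" = "1" ]; then echo "+ $*"; else "$@"; fi
}

# Run init / plan / apply inside one Terraform directory.
# The body is a subshell, so the cd does not leak to the caller.
provision() (
  run cd "$1"
  run terraform init
  run terraform plan
  run terraform apply
)

provision "$SAMPLE_AWS/remote-state"   # step 1: remote state
provision "$SAMPLE_AWS"                # step 2: DIGIT infra
```

Using a subshell for `provision` keeps both calls relative to the starting directory, so the second call does not depend on where the first one ended up.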

Important:

The DB password will be asked for during the apply stage. Please remember the password you provide. It must be at least 8 characters long, otherwise RDS provisioning will fail.

The output of the apply command will be displayed on the console. Store this in a file somewhere. Values from this file will be used in the next step of deployment.
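Both notes above can be turned into small guards around the apply step. The sketch below is only an illustration: the helper names and the default output file name are hypothetical. The saved file may contain sensitive values, so keep it private.

```shell
# Sketch: two small guards around the apply step.
# Both helper names are hypothetical, not part of the sample-aws scripts.

# Succeeds only if the password is at least 8 characters long.
is_valid_db_password() {
  [ "${#1}" -ge 8 ]
}

# Runs terraform apply and keeps a copy of the console output in a file.
# The file name defaults to a hypothetical terraform-apply-output.txt.
apply_and_save_output() {
  terraform apply 2>&1 | tee "${1:-terraform-apply-output.txt}"
}
```

For example, `is_valid_db_password "$DB_PASSWORD" || echo "password too short"` checks a candidate password before it is typed into the Terraform prompt.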

2. Get the kubeconfig file for the cluster from EKS. The region code is the default region of the availability zones provided in variables.tf, e.g. ap-south-1. The EKS cluster name should also have been filled in variables.tf.

3. Finally, verify that you are able to connect to the cluster by running the following command
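Steps 2 and 3 can be sketched with the AWS CLI and kubectl. This is one possible approach and not necessarily the one the guide links to. The cluster name and zone are hypothetical placeholders, and `run`, `region_from_az`, and `connect_to_cluster` are invented helpers. Deriving the region by dropping the trailing zone letter assumes standard zone names such as ap-south-1b. By default the script only prints the commands.

```shell
# Sketch: fetch the kubeconfig for the new cluster and check connectivity.
# CLUSTER_NAME and AZ are hypothetical placeholders; use your variables.tf values.
set -eu

CLUSTER_NAME="${CLUSTER_NAME:-my-digit-cluster}"
AZ="${AZ:-ap-south-1b}"
DRY_RUN="${DRY_RUN:-1}"   # 1 = only print the commands, 0 = execute them

# Print the command in dry-run mode, otherwise execute it.
run() {
  if [ "$DRY_RUN" = "1" ]; then echo "+ $*"; else "$@"; fi
}

# Derive the region code from a zone name by dropping one trailing letter,
# e.g. ap-south-1b becomes ap-south-1.
region_from_az() {
  echo "${1%[a-z]}"
}

connect_to_cluster() {
  region="$(region_from_az "$AZ")"
  # Writes or updates the cluster entry in the local kubeconfig.
  run aws eks update-kubeconfig --region "$region" --name "$CLUSTER_NAME"
  # Lists the worker nodes if the connection works.
  run kubectl get nodes
}

connect_to_cluster
```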

At this point, your basic infra has been provisioned. Please move to the next step to install DIGIT.

Destroying Infra

To destroy previously created infrastructure with Terraform, follow the steps below:

The ELB is not deployed via Terraform. It is created at deployment time by the setup of the Kubernetes Ingress, so it has to be deleted manually by deleting the ingress service.

kubectl delete deployment nginx-ingress-controller -n <namespace>
kubectl delete svc nginx-ingress-controller -n <namespace>

Note: Namespace can be one of egov or jenkins.

Delete the S3 buckets manually from the AWS console, and verify that the ELB was deleted.

If the ELB was not deleted, delete it from the AWS console.

Run terraform destroy.

Sometimes not all artefacts associated with a deployment can be deleted through Terraform. For example, RDS instances might have to be deleted manually. It is recommended to log in to the AWS management console and look through the infra to delete any remnants.
