Logging

Logging solution in Kubernetes with the ECK Operator

In this article, we’ll deploy the ECK Operator to a Kubernetes cluster with Helm and build a ready-to-use logging solution with Elasticsearch, Kibana, and Filebeat.

What is ECK?

Built on the Kubernetes Operator pattern, Elastic Cloud on Kubernetes (ECK) extends the basic Kubernetes orchestration capabilities to support the setup and management of Elasticsearch, Kibana, APM Server, Enterprise Search, Beats, Elastic Agent, and Elastic Maps Server on Kubernetes.

With Elastic Cloud on Kubernetes, we can streamline critical operations, such as:

Managing and monitoring multiple clusters

Scaling cluster capacity and storage

Performing safe configuration changes through rolling upgrades

Securing clusters with TLS certificates

Setting up hot-warm-cold architectures with availability zone awareness

Install ECK

1. In this case, we use helmfile to manage the Helm deployments: helmfile.yaml
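A minimal helmfile.yaml for this step might look like the following sketch. The release name `elastic-operator` and the namespace `elastic-system` are assumptions, not values taken from the original setup, and no chart version is pinned here, which you would normally do in a real deployment.

```shell
# Write a minimal helmfile.yaml for the ECK operator.
# Release name and namespace are assumed values; adjust them as needed.
cat > helmfile.yaml <<'EOF'
repositories:
  - name: elastic
    url: https://helm.elastic.co

releases:
  - name: elastic-operator
    namespace: elastic-system
    createNamespace: true
    chart: elastic/eck-operator
EOF
```

With this file in place, running `helmfile apply` in the same directory should install the operator.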

2. Alternatively, we can do the same with plain Helm: Installation using helm
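As a sketch, the plain Helm installation could look like this. The release name and namespace are assumptions chosen for illustration; the repository URL and chart name are the ones published by Elastic.

```shell
# Add the Elastic Helm repository and refresh the local chart index.
helm repo add elastic https://helm.elastic.co
helm repo update

# Assumed release name and namespace; adjust to your conventions.
RELEASE=elastic-operator
NAMESPACE=elastic-system

# Install the ECK operator chart, creating the namespace if it is missing.
helm install "$RELEASE" elastic/eck-operator \
  --namespace "$NAMESPACE" --create-namespace
```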

After that we can see that the ECK pod is running:
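One way to check this, assuming the operator was installed into a namespace called `elastic-system` (an assumption, not a value confirmed by the article):

```shell
# List the pods in the (assumed) operator namespace.
NAMESPACE=elastic-system
kubectl get pods --namespace "$NAMESPACE"
```

The operator pod should be listed with the status `Running` once it is ready.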

The pod is up and running

Creating Elasticsearch, Kibana, and Filebeat resources

The Elastic Stack includes many different applications, such as:

Elasticsearch

Kibana

Beats (Filebeat/Metricbeat)

APM Server

Elastic Maps

etc

In our case, we’ll use only the first three of them, because we just want to deploy a classic EFK stack (Elasticsearch, Filebeat, Kibana).

Let’s deploy the following resources, in this order:

1. Elasticsearch cluster: This cluster has 3 nodes, each with 100Gi of persistent storage, and the nodes communicate with each other over TLS using a self-signed certificate.
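A manifest along these lines could describe such a cluster. This is a sketch under stated assumptions: the resource name `elasticsearch`, the `default` namespace, the version `8.13.4`, and the `node.store.allow_mmap` setting are illustrative choices, not values from the original manifest. ECK generates self-signed TLS certificates by default, so no extra TLS configuration appears here.

```shell
# Write an Elasticsearch manifest sketch: 3 nodes, 100Gi of storage per node.
cat > elasticsearch.yaml <<'EOF'
apiVersion: elasticsearch.k8s.elastic.co/v1
kind: Elasticsearch
metadata:
  name: elasticsearch        # assumed name
  namespace: default         # assumed namespace
spec:
  version: 8.13.4            # assumed version; keep it aligned across the stack
  nodeSets:
    - name: default
      count: 3
      config:
        # Assumption: avoids having to raise vm.max_map_count on the hosts.
        node.store.allow_mmap: false
      volumeClaimTemplates:
        - metadata:
            name: elasticsearch-data   # claim name that ECK expects for data
          spec:
            accessModes:
              - ReadWriteOnce
            resources:
              requests:
                storage: 100Gi
EOF
```

Apply it with `kubectl apply -f elasticsearch.yaml`, then watch `kubectl get elasticsearch` until the cluster reports a healthy state.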

2. The next one is Kibana: This manifest is very simple; the Kibana object just references the Elasticsearch cluster.
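A Kibana manifest of this kind might look like the sketch below. It assumes an Elasticsearch resource named `elasticsearch` in the same namespace; the names, namespace, and version are illustrative. Disabling the self-signed certificate on the HTTP layer is also an assumption, made only so that a plain `http://` URL works when accessing Kibana locally.

```shell
# Write a Kibana manifest sketch that references the Elasticsearch cluster.
cat > kibana.yaml <<'EOF'
apiVersion: kibana.k8s.elastic.co/v1
kind: Kibana
metadata:
  name: kibana               # assumed name
  namespace: default         # assumed namespace
spec:
  version: 8.13.4            # assumed version; should match Elasticsearch
  count: 1
  elasticsearchRef:
    name: elasticsearch      # assumed name of the Elasticsearch resource
  http:
    tls:
      selfSignedCertificate:
        disabled: true       # assumption: serve plain HTTP for local access
EOF
```

Apply it with `kubectl apply -f kibana.yaml`. If you keep TLS enabled instead, Kibana is served over `https://` with a self-signed certificate.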

3. The next one is Filebeat: This manifest contains the DaemonSet used by Filebeat, plus the ServiceAccount and the related resources it needs.
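The following sketch shows one possible shape for such a manifest, modeled on the autodiscover pattern commonly used with ECK. All names, the `default` namespace, and the version are assumptions, and the original manifest may differ in its inputs and processors. The ServiceAccount, ClusterRole, and ClusterRoleBinding give Filebeat read access to pod metadata.

```shell
# Write a Filebeat manifest sketch; names, namespace, and version are assumed.
cat > filebeat.yaml <<'EOF'
apiVersion: beat.k8s.elastic.co/v1beta1
kind: Beat
metadata:
  name: filebeat
  namespace: default
spec:
  type: filebeat
  version: 8.13.4
  elasticsearchRef:
    name: elasticsearch      # assumed name of the Elasticsearch resource
  kibanaRef:
    name: kibana             # assumed name of the Kibana resource
  config:
    filebeat:
      autodiscover:
        providers:
          - type: kubernetes
            node: ${NODE_NAME}
            hints:
              enabled: true
              default_config:
                type: container
                paths:
                  - /var/log/containers/*${data.kubernetes.container.id}.log
  daemonSet:
    podTemplate:
      spec:
        serviceAccountName: filebeat
        automountServiceAccountToken: true
        dnsPolicy: ClusterFirstWithHostNet
        hostNetwork: true
        containers:
          - name: filebeat
            securityContext:
              runAsUser: 0   # root, so Filebeat can read the host log files
            env:
              - name: NODE_NAME
                valueFrom:
                  fieldRef:
                    fieldPath: spec.nodeName
            volumeMounts:
              - name: varlogcontainers
                mountPath: /var/log/containers
              - name: varlogpods
                mountPath: /var/log/pods
              - name: varlibdockercontainers
                mountPath: /var/lib/docker/containers
        volumes:
          - name: varlogcontainers
            hostPath:
              path: /var/log/containers
          - name: varlogpods
            hostPath:
              path: /var/log/pods
          - name: varlibdockercontainers
            hostPath:
              path: /var/lib/docker/containers
---
apiVersion: v1
kind: ServiceAccount
metadata:
  name: filebeat
  namespace: default
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  name: filebeat
rules:
  - apiGroups: [""]
    resources: [namespaces, pods, nodes]
    verbs: [get, watch, list]
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: filebeat
subjects:
  - kind: ServiceAccount
    name: filebeat
    namespace: default
roleRef:
  kind: ClusterRole
  name: filebeat
  apiGroup: rbac.authorization.k8s.io
EOF
```

Apply it with `kubectl apply -f filebeat.yaml`. The heredoc delimiter is quoted so that the shell leaves `${NODE_NAME}` and `${data.kubernetes.container.id}` untouched for Filebeat to resolve.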

Testing

1. First of all, let’s get the password for Kibana: This password will be used to log in to Kibana.
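ECK stores the password of the built-in `elastic` user in a Secret named `<cluster-name>-es-elastic-user`. The sketch below assumes the Elasticsearch resource is called `elasticsearch` and lives in the `default` namespace; substitute your own values.

```shell
# Assumed Elasticsearch resource name and namespace.
ES_NAME=elasticsearch
NAMESPACE=default

# Print the decoded password of the built-in "elastic" user.
kubectl get secret "${ES_NAME}-es-elastic-user" --namespace "$NAMESPACE" \
  -o go-template='{{.data.elastic | base64decode}}'
```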

2. Run port-forward to the Kibana service: Port 5601 is forwarded to localhost.
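ECK exposes Kibana through a Service named `<kibana-name>-kb-http`. Assuming the Kibana resource is called `kibana` in the `default` namespace (both are assumptions), the port-forward could be started like this:

```shell
# Assumed Kibana resource name and namespace.
KIBANA_NAME=kibana
NAMESPACE=default

# Forward local port 5601 to the Kibana service; runs until interrupted.
kubectl port-forward --namespace "$NAMESPACE" "service/${KIBANA_NAME}-kb-http" 5601
```

The command keeps running in the foreground, so use a separate terminal for it and stop it with Ctrl+C when you are done.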

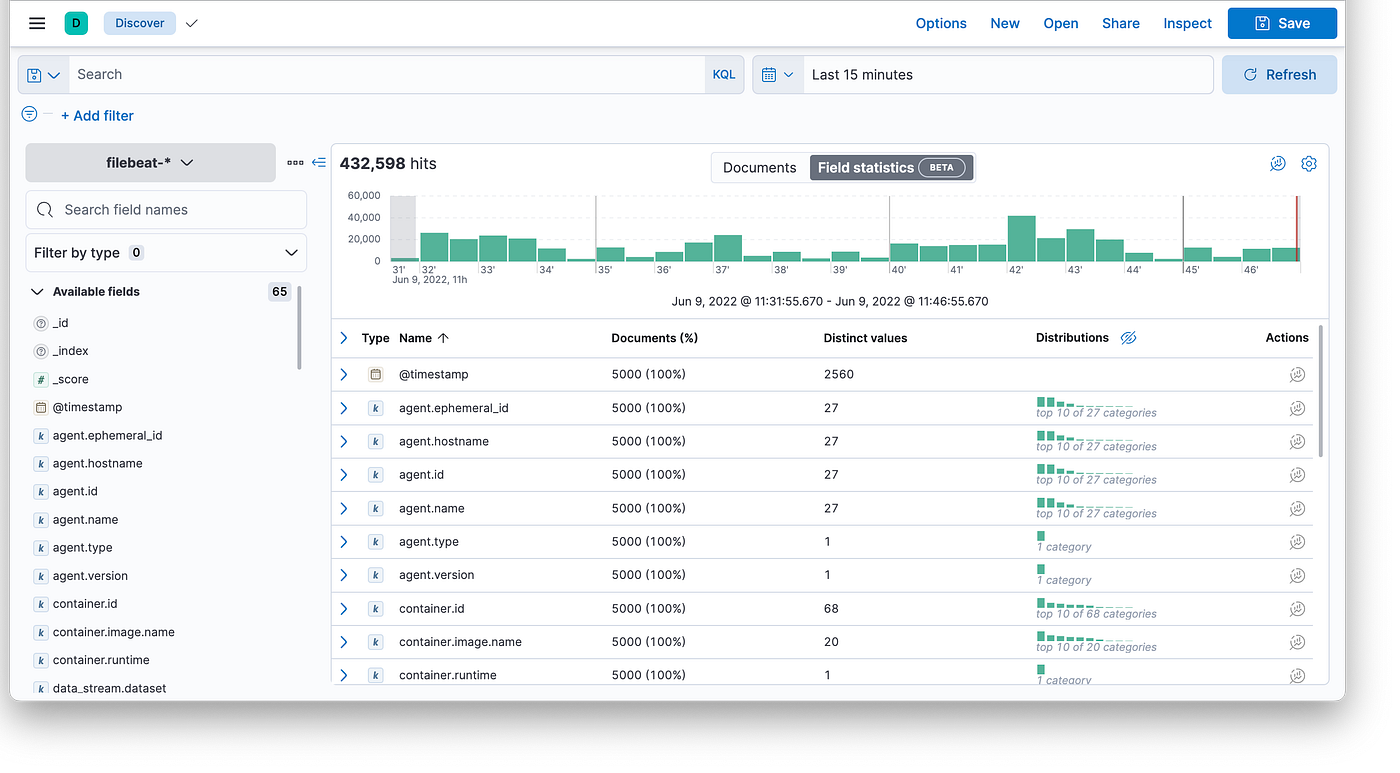

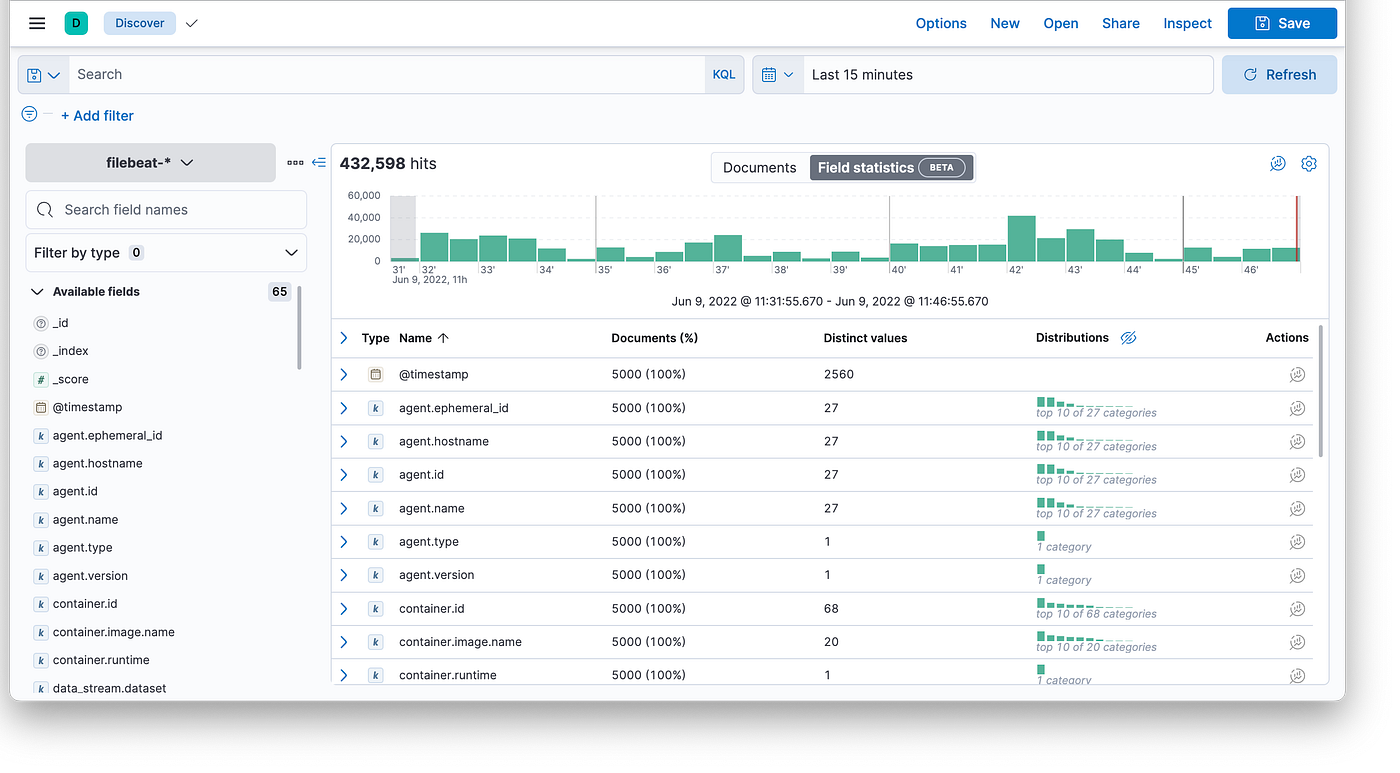

3. Let’s log in to Kibana at http://localhost:5601 with the user elastic and the password that we got before, go to the Analytics > Discover section, and check the logs.
